Increasing EBS disk space on a running EC2 instance

One of the best parts of running in the cloud is the ability to make “hardware”-level changes without even a reboot.

First, in the AWS Console, go to the EBS Volumes screen. Hopefully you have given your EBS volumes human-readable names; otherwise you will have to click on each volume to see which instance it is attached to.

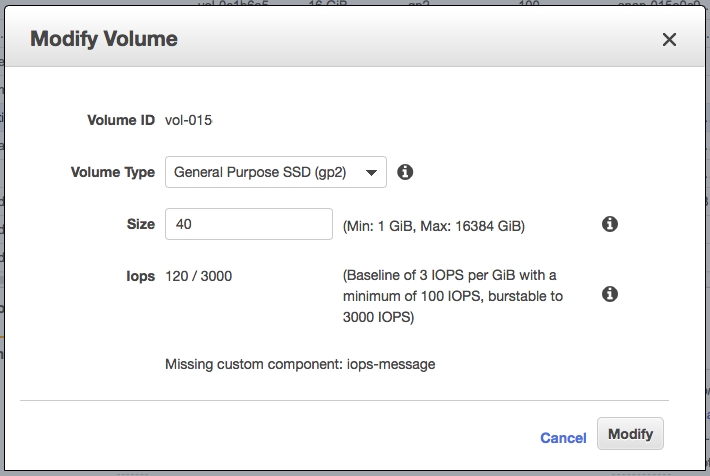

Right-click the volume, select Modify Volume, and increase its Size.
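If you prefer the command line, the same change can be requested with the AWS CLI. This is a minimal sketch, assuming the AWS CLI is installed and configured with permission to call `ec2:ModifyVolume` and `ec2:DescribeVolumesModifications`; the helper names `ready_to_grow` and `resize_volume` are hypothetical. AWS lets you extend the filesystem once the modification reaches the `optimizing` state, so the sketch polls until then.

```shell
# Hypothetical helper: succeed when an EBS modification state means the
# new size is already usable by the instance.
ready_to_grow() {
  case "$1" in
    optimizing|completed) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: request a new size, then poll until it is usable.
# Usage: resize_volume VOLUME_ID NEW_SIZE_GIB
resize_volume() {
  local vol=$1 size=$2 state i
  aws ec2 modify-volume --volume-id "$vol" --size "$size" >/dev/null || return 1
  for i in $(seq 1 60); do   # give up after roughly 5 minutes
    state=$(aws ec2 describe-volumes-modifications --volume-ids "$vol" \
      --query 'VolumesModifications[0].ModificationState' --output text)
    if ready_to_grow "$state"; then
      return 0
    fi
    [ "$state" = failed ] && return 1
    sleep 5
  done
  return 1
}
```

For example, `resize_volume vol-0123456789abcdef0 40` (a made-up volume ID) would request 40 GiB and return once the instance can use it.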

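Then SSH into the instance. If it is the only volume attached, you should be able to run the two commands below: growpart extends partition 1 to fill the larger disk, and resize2fs then grows the filesystem to fill the partition.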

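```shell
sudo growpart /dev/xvda 1
sudo resize2fs /dev/xvda1
```

If you have a newer (Nitro-based) EC2 instance, where EBS volumes show up as NVMe devices, it will look more like this:

```shell
sudo growpart /dev/nvme0n1 1
sudo resize2fs /dev/nvme0n1p1
```

Here is the output from one such run (on this machine the root disk happened to be nvme1n1):

```
> sudo growpart /dev/nvme1n1 1
CHANGED: partition=1 start=2048 old: size=16775135 end=16777183 new: size=25163743,end=25165791
> sudo resize2fs /dev/nvme1n1p1
resize2fs 1.44.1 (24-Mar-2018)
Filesystem at /dev/nvme1n1p1 is mounted on /; on-line resizing required
old_desc_blocks = 1, new_desc_blocks = 2
The filesystem on /dev/nvme1n1p1 is now 3145467 (4k) blocks long.
```

Note that resize2fs only works on ext2/ext3/ext4 filesystems. If your root filesystem is XFS (the default on Amazon Linux 2), run `sudo xfs_growfs -d /` instead of resize2fs.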
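The partition name is the disk name plus the partition number, with an extra `p` when the disk name ends in a digit: xvda becomes xvda1, but nvme0n1 becomes nvme0n1p1. A small hypothetical helper (not part of growpart or resize2fs) can build the right name for either style:

```shell
# Hypothetical helper: print the partition device for DISK and PARTNUM.
# Linux inserts a "p" when the disk name ends in a digit (nvme0n1p1)
# and appends the number directly otherwise (xvda1).
partition_path() {
  local disk=${1#/dev/} num=$2   # accept "xvda" or "/dev/xvda"
  case "$disk" in
    *[0-9]) printf '/dev/%sp%s\n' "$disk" "$num" ;;
    *)      printf '/dev/%s%s\n' "$disk" "$num" ;;
  esac
}
```

For example: `sudo growpart /dev/nvme0n1 1 && sudo resize2fs "$(partition_path nvme0n1 1)"`. Chaining with `&&` means resize2fs only runs if growpart succeeded.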

If you have multiple volumes attached, or the commands above fail, use lsblk to find the device name:

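```
> lsblk
NAME    MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
xvda    202:0    0  40G  0 disk
└─xvda1 202:1    0  20G  0 part /
```

Here the disk xvda is already 40G but its partition xvda1 is still 20G, so you would pass /dev/xvda and partition number 1 to growpart, then run resize2fs on /dev/xvda1.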
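To script this lookup, lsblk can print each device's parent disk in a raw, headerless format with `lsblk -rno NAME,PKNAME,MOUNTPOINT`. This is a minimal sketch, assuming a util-linux lsblk that supports the PKNAME column; the function name is hypothetical. It reads the lsblk output on standard input, so it can also be tried against saved output.

```shell
# Hypothetical helper: read `lsblk -rno NAME,PKNAME,MOUNTPOINT` output on
# stdin and print "DISK PARTNUM" for the partition mounted at /.
root_partition() {
  awk '$3 == "/" && $2 != "" {
    # The partition number is the trailing digits of the partition name,
    # e.g. xvda1 -> 1, nvme0n1p1 -> 1.
    num = $1
    sub(/^.*[^0-9]/, "", num)
    print $2, num
  }'
}
```

For the lsblk output shown above, `lsblk -rno NAME,PKNAME,MOUNTPOINT | root_partition` would print `xvda 1`, which are the two arguments growpart needs (with `/dev/` in front of the disk name).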

Resizing when No space left on device

If you are resizing the disk, it's probably because you are low on disk space. Unfortunately, when you are completely out (0 bytes of free disk space), you will run into another issue: growpart can't create its temporary directory, which is named after its pid (process id), so it can't start.

```
mkdir: cannot create directory ‘/tmp/growpart.7446’: No space left on device
```

growpart only needs a tiny amount of space, but there are a lot of other processes running on the machine that are also out of disk space and competing for any bytes that free up. So we need to carve out a reasonable amount of space, expecting some of it to be used up by other processes.
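Before retrying growpart, it helps to confirm how much space is actually free. This is a minimal sketch using POSIX `df -Pk` output (one line per filesystem, with available space in KiB in the fourth column); the helper names are hypothetical, and the right threshold depends on how busy the machine is.

```shell
# Hypothetical helper: print the "Available" column (KiB) from `df -Pk`
# output on stdin, skipping the header line.
df_avail_kb() {
  awk 'NR == 2 { print $4 }'
}

# Hypothetical helper: succeed if the filesystem holding PATH has at
# least MIN_MB MiB free.
has_free_mb() {
  local path=$1 min_mb=$2 avail
  avail=$(df -Pk "$path" | df_avail_kb)
  [ "${avail:-0}" -ge $((min_mb * 1024)) ]
}
```

For example, `has_free_mb /tmp 200 || echo "free more space before resizing"` warns when /tmp has less than 200 MiB available.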

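A good place to find that space is the systemd journal. Check how big /var/log/journal is:

```
> du -sh /var/log/journal/
345M    /var/log/journal/
```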

We are hoping to see this folder at a few hundred megabytes or more. We are going to ask journald to clean up these logs. Technically you could wipe out this folder, but let's just free up a few hundred megabytes so we can resize the partition.

You can also ask journald how much space it is using:

```
> journalctl --disk-usage
Archived and active journals take up 1.0G in the file system.
```

```shell
sudo journalctl --vacuum-size=300M
```

As soon as that finishes, go back and run the resize commands before something else uses that space. If clearing out /var/log/journal still doesn't free enough space, you will have to hunt around for large files you can remove.

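```shell
sudo du -ax / 2>/dev/null | sort -n -r | head -n 100
```

This command takes a while to run. It lists the 100 largest files and directories on the root filesystem, with sizes in KiB; `-x` keeps du from descending into other filesystems such as /proc.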
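Because the space freed by vacuuming can be claimed by other processes within seconds, one option is to chain the steps from this section so the resize starts the moment the vacuum succeeds. This is a minimal sketch: the helper names are hypothetical, the device names must come from your own lsblk output, it assumes an ext4 root filesystem (resize2fs), and setting `DRY_RUN=1` only prints the commands instead of running them.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: shrink the journal, then immediately grow the
# partition and the filesystem. Each step runs only if the previous one
# succeeded.
# Usage: vacuum_and_grow DISK PARTNUM PARTDEV [JOURNAL_SIZE]
vacuum_and_grow() {
  local disk=$1 partnum=$2 partdev=$3 keep=${4:-300M}
  run sudo journalctl --vacuum-size="$keep" &&
    run sudo growpart "$disk" "$partnum" &&
    run sudo resize2fs "$partdev"
}
```

For example, `DRY_RUN=1 vacuum_and_grow /dev/nvme0n1 1 /dev/nvme0n1p1` prints the three commands; drop `DRY_RUN=1` to run them.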